2021-05-31

In eleventh grade, my U.S. history class had one particularly annoying form of homework: outlines. These dreaded assignments asked us to summarize the textbook sections under a given list of headers. For months, my classmates and I labored over these outlines, paging through the textbook only to copy a condensed version of its content into our own. It seemed so easy that a robot could do it!

Wait, could it?

I should make a minor correction to the previous paragraph: I wasn't turning physical pages. Instead, I was clicking through an online textbook. Could I automate this? You can probably guess what the answer was.

I don't have much experience with web development (as evidenced by this site!), and this was also my first Python project, so practically every technology I used was new to me. I managed to sift through the convoluted mess of a DOM structure that made up the online textbook and figure out which elements corresponded to the content I needed. Once I could reliably parse the page headers, I had to work out how to reach other pages. I did this by simulating typing into a navigation field and clicking a button.
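For readers curious what the navigation step can look like, here is a minimal sketch of the type-then-click approach with Selenium. It assumes a Selenium WebDriver (or anything exposing the same `find_element`/`find_elements` interface) is passed in. The CSS selectors and the functions `go_to_page` and `read_headers` are hypothetical placeholders, not the textbook's real markup or the original script's code.

```python
# Hypothetical CSS selectors; the real textbook's DOM was different.
PAGE_FIELD = "input#page-number"      # hypothetical "go to page" text box
GO_BUTTON = "button#go"               # hypothetical button that submits it
SECTION_HEADER = "h2.section-title"   # hypothetical section header element

# "css selector" is the string value of Selenium's By.CSS_SELECTOR.
CSS = "css selector"


def go_to_page(driver, page: int) -> None:
    """Type a page number into the navigation field and click the button."""
    field = driver.find_element(CSS, PAGE_FIELD)
    field.clear()                  # drop whatever page number was there before
    field.send_keys(str(page))     # simulate typing the new page number
    driver.find_element(CSS, GO_BUTTON).click()
    # On a real site, wait for the new page to load here (for example with
    # Selenium's WebDriverWait) before reading anything from it.


def read_headers(driver) -> list[str]:
    """Return the text of every section header on the current page."""
    return [el.text.strip() for el in driver.find_elements(CSS, SECTION_HEADER)]
```

With a real browser this would be driven by something like `driver = webdriver.Firefox()`, `driver.get(textbook_url)` (where `textbook_url` is a placeholder), then `go_to_page(driver, 214)` and `read_headers(driver)`. Passing the driver in, rather than creating it inside each function, keeps one browser session alive across every page the scraper visits.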

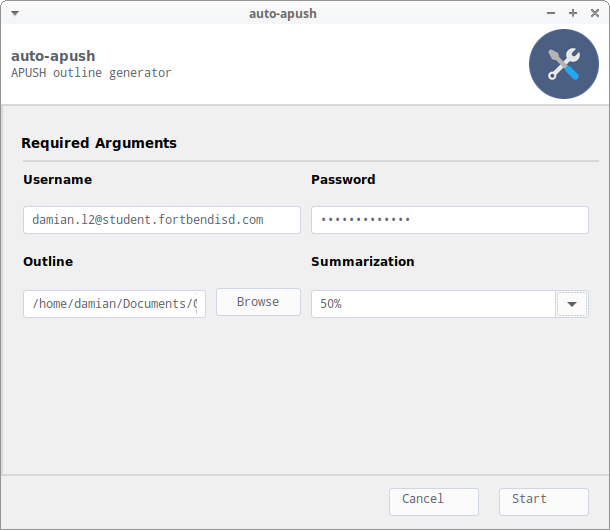

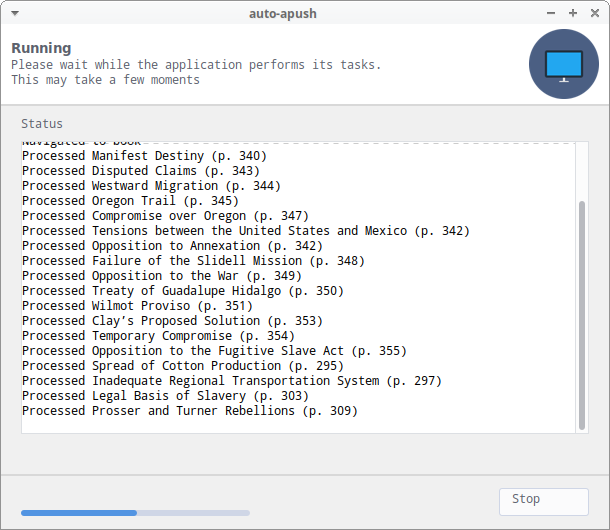

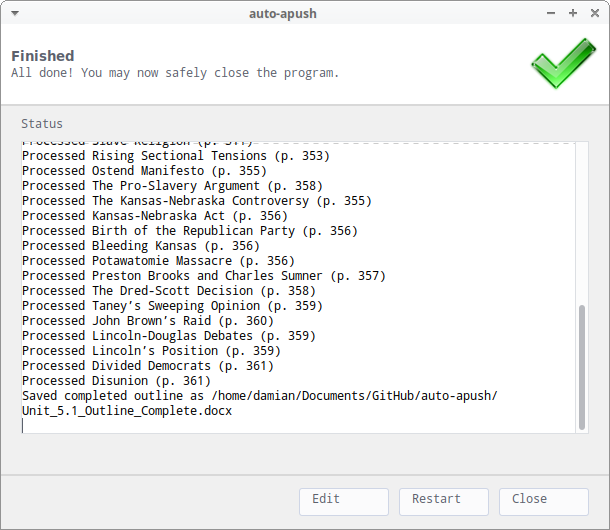

After solving a few Selenium issues, I had finished the actual scraping part of the program. I had accomplished what I set out to do, but I wondered if I could take it even further. I noticed that the homework document listing the headers to outline was consistently structured. A few tutorials later, I was using a library for Word documents to parse these assignments, extract the headers and page numbers, and feed them directly into the scraper. Now that I was comfortable working with documents, switching the output from the console to a nicely formatted document was simple. By the end of it, the only argument the program needed was the path to the assignment document. A minute or so later, it would spit out a completed assignment (minus the summarization, which I added later). Still, I wondered if I could make the process even simpler. After I wrapped the script in a GUI, this was the end result. I'm not showing any pictures of the scraper-controlled browser or the resulting document, just to stay on the safe side of copyright, but you get the idea.
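Because the assignment documents were consistently structured, pulling out the headers and page numbers can be as simple as a regular expression over each paragraph. The sketch below assumes a hypothetical line format of `Header (p. 214)`; the real assignments may have been laid out differently, and `parse_assignment` is an illustrative name, not the original code.

```python
import re
from typing import Iterable

# Hypothetical line format: "<header> (p. <page>)",
# e.g. "The Louisiana Purchase (p. 214)".
ENTRY = re.compile(r"(?P<header>.+?)\s*\(p\.\s*(?P<page>\d+)\)")


def parse_assignment(paragraphs: Iterable[str]) -> list[tuple[str, int]]:
    """Pull (header, page) pairs out of the assignment's paragraph texts.

    Lines that don't match the expected format (instructions, blank
    lines) are skipped.
    """
    entries = []
    for text in paragraphs:
        match = ENTRY.fullmatch(text.strip())
        if match:
            entries.append((match["header"], int(match["page"])))
    return entries
```

Keeping the parser independent of any Word library makes it easy to test. With python-docx (one such library; whether it was the one used here is an assumption), the input would come from `[p.text for p in docx.Document(path).paragraphs]`, and the same library's `add_heading` and `add_paragraph` methods can write the formatted output.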

With the program complete, I packaged it into an executable. Due to issues with wheel files and system XML libraries, I wasn't able to build a Windows binary, although running the script in a normal Python environment worked fine. A few people asked me how to get it working, and I suggested either running the script directly or finding a way to run the binary I had already built. I have no idea if anyone else actually managed to use it, which was probably for the best. Still, this was tame compared to the riskier programs I built in high school, which I'll write about in the next post.